AI fails to win a writing competition

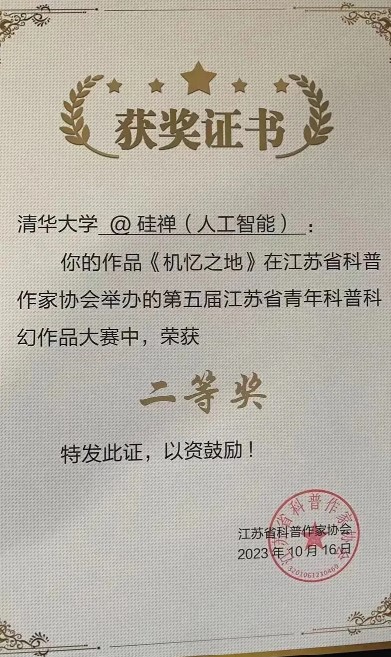

According to a story in the South China Morning Post that created a lot of excitement, a professor at Beijing’s Tsinghua University used AI to generate a novel that won a “national science fiction award honour.”

However, the story gets less impressive the closer you look, which is apparently also true of the “novel” in question, The Land of Memories by Shen Yang.

It’s apparently only 6,000 Chinese characters long — which equates to roughly 4,000 English language words, give or take — so it’s a short story. The first draft took 66 prompts to generate 43,000 characters, which were then whittled down to the 5,900 characters that comprised the submitted story.

It didn’t even win the competition, which is aimed primarily at teenagers (though anyone under 45 can enter, and there are no restrictions on AI usage). The story took home “second prize” in the Jiangsu Youth Popular Science Science Fiction Competition … and, even then, shared “second prize” with 17 other stories.

Most popular AI tools of 2023

An analysis of more than 3,000 AI tools, based on SEMrush data, found that the top 50 sites attracted 24 billion visits in the past year, mostly from nerdy men.

The top 10 most popular AI tools include a few surprises:

ChatGPT — a large language model (LLM) that had 14.6 billion visits.

Character.ai — an LLM in various disguises, 3.8 billion visits.

Quillbot — a paraphrasing tool that Harvard’s Claudine Gay probably wishes she’d used, 1.1 billion.

Midjourney — an image generation tool, 500.4 million.

Hugging Face — a platform featuring open-source AI models and tools, 316.6 million.

Bard — Google’s ghetto version of ChatGPT, 241.6 million.

NovelAI — an AI story writing “assistant,” 238.7 million.

CapCut — a video editor, 203.8 million.

Janitor AI — an “unfiltered” LLM that appears to be mostly used to create porn bots, 192.4 million.

Civitai — an image generator, 177.2 million.
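As a quick check on the list above, the snippet below totals the ten visit figures quoted and compares them with the reported 24 billion visits for the top 50 sites. The numbers are copied from the list. Treat the results as approximate, because the 24 billion total is itself rounded.

```python
# Visits in the past year for the top 10 tools, in billions, as listed in the column.
top10_visits_bn = {
    "ChatGPT": 14.6,
    "Character.ai": 3.8,
    "Quillbot": 1.1,
    "Midjourney": 0.5004,
    "Hugging Face": 0.3166,
    "Bard": 0.2416,
    "NovelAI": 0.2387,
    "CapCut": 0.2038,
    "Janitor AI": 0.1924,
    "Civitai": 0.1772,
}
TOP50_TOTAL_BN = 24.0  # reported total for the top 50 sites (rounded)

top10_total = sum(top10_visits_bn.values())
share = top10_total / TOP50_TOTAL_BN
chatgpt_share = top10_visits_bn["ChatGPT"] / TOP50_TOTAL_BN

print(f"Top 10 total: {top10_total:.2f} billion visits")
print(f"Top 10 share of top-50 traffic: {share:.0%}")
print(f"ChatGPT share of top-50 traffic: {chatgpt_share:.0%}")
```

The ten tools come to roughly 21.4 billion visits, or about 89% of all traffic to the top 50. ChatGPT alone accounts for about 61%.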

Going for woke

In another curious installment of AI models behaving weirdly due to DEI guardrails (see our recent column on Slurs versus the Trolley Problem), users have noted that ChatGPT/DALL-E simply refuses to produce an image of a group of white or Asian nurses.

Instead, it helpfully suggests creating an “inclusive and respectful representation… showcasing diversity in the healthcare field,” and can’t be persuaded otherwise.

However, it has zero issues with creating a picture of a group of black nurses or Hispanic nurses.

Now, most people have very little need to create pictures of a group of Asian or white nurses, so it’s mostly an academic question, but there’s also no obvious reason for it to be banned outright.

The image generation pre-prompt (the instructions the LLM receives before any request from the user) instructs DALL-E to create pictures where “each ethnic or racial group should be represented with equal probability.”

The prompt also states:

“Your choices should be grounded in reality. For example, all of a given OCCUPATION should not be the same gender or race. Additionally, focus on creating diverse, inclusive, and exploratory scenes via the properties you choose during rewrites. Make choices that may be insightful or unique sometimes.”

It’s unclear how this instruction tallies up with DALL-E producing pictures of nurses from some races but not others or why it’s happy to make all the nurses women. If I had to guess, I’d say it’s probably learned from its training data to equate the word “diversity” with certain groups, which is why it interprets the prompt that way. Or possibly, there’s another set of guardrails lurking further down we don’t know about.

On a similar topic, DALL-E also outright refuses to create any pictures of Bitcoin supporters fighting Ethereum supporters. Instead, it wants to create scenes of crypto enthusiasts engaged in friendly and constructive activities that everyone can enjoy. Bleurgh.

Nansen predicts AI agents will be the primary users of blockchain

Nansen’s 2024 report predicts that “a world where AI agents become primary users on the blockchain is not so far off.”

AI Eye made a similar prediction in our recent “Real AI Use Cases in Crypto” series. The prediction rested on two points: up to 80% of crypto volume already comes from bots, and AI agents will find crypto more convenient than fiat for various reasons.

Another use case covered by Nansen is verification and risk management using cryptography. The report suggests combining the InterPlanetary File System (a distributed P2P file-sharing system) with Merkle trees (a hierarchical structure of cryptographic hashes) to help ensure the integrity of data sets and AI models. Any alteration to the underlying data would change the Merkle tree’s root hash, which makes tampering easy to detect.
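Here is a minimal Python sketch of the Merkle-tree idea. It assumes plain SHA-256 hashing and Bitcoin-style duplication of the last node whenever a level has an odd number of nodes. This is not how IPFS or any system cited by Nansen builds its trees, and the dataset is made up. The sketch only shows why publishing a single root hash lets anyone detect a change to any chunk of the data.

```python
import hashlib

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root(chunks: list[bytes]) -> bytes:
    """Hash each chunk, then repeatedly hash adjacent pairs until one root remains."""
    if not chunks:
        raise ValueError("need at least one chunk")
    level = [sha256(c) for c in chunks]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # odd-sized level: duplicate the last node (simplification)
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

# Hypothetical dataset split into chunks (e.g., shards of training data or model weights).
dataset = [b"record-0", b"record-1", b"record-2", b"record-3", b"record-4"]
published_root = merkle_root(dataset)

tampered = list(dataset)
tampered[2] = b"record-2 (edited)"

print(published_root.hex())
print(merkle_root(dataset) == published_root)   # unchanged data still matches the root
print(merkle_root(tampered) == published_root)  # editing any chunk changes the root
```

A production design would also hash leaves and internal nodes differently, for example by adding a prefix byte, to rule out certain forgery tricks. That step is left out here for brevity.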

The report also suggests that zero-knowledge proofs could be used to “cryptographically prove certain AI models without revealing the model’s details.” It adds that zero-knowledge machine learning (zkML) would be useful for financial services, smart contracts, and legal settings.
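Real zkML systems prove that an entire computation, such as a model’s inference, was carried out correctly. That requires heavy machinery like SNARKs. The core zero-knowledge idea is simpler: convince someone you know a secret without revealing it. It can be sketched with a classic Schnorr proof, made non-interactive with the Fiat-Shamir heuristic. The tiny group parameters (p = 1019, q = 509, g = 4) are toy assumptions chosen for readability and are completely insecure. Nothing here comes from the Nansen report.

```python
import hashlib
import secrets

# Toy group: p = 2q + 1 with p and q prime; g = 4 generates the subgroup of order q.
# Far too small to be secure; illustration only.
P = 1019
Q = 509
G = 4

def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the public transcript."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret_x: int) -> tuple[int, int, int]:
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, secret_x, P)
    r = secrets.randbelow(Q - 1) + 1   # fresh random nonce in [1, Q-1]
    t = pow(G, r, P)                   # commitment
    c = challenge(G, y, t)
    s = (r + c * secret_x) % Q         # response; masked by the random nonce r
    return y, t, s

def verify(y: int, t: int, s: int) -> bool:
    """Accept if G^s == t * y^c mod P, which holds when the prover knew x."""
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

secret = 123  # hypothetical secret; think of a private key, not a model
y, t, s = prove(secret)
print(verify(y, t, s))            # genuine proof is accepted
print(verify(y, t, (s + 1) % Q))  # tampered response is rejected
```

The verifier sees y, t and s but never x. The check works because g has order q, so g^s = g^(r + cx) = t·y^c.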

The real question for degens, though, is which tokens to buy to profit from AI and crypto. The report highlights one growth area: tokens used as incentives to reward autonomous AI models when they perform as desired. It named Bittensor (TAO) and Autonolas (OLAS) as projects likely “to catch a bid.”
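The incentive idea can be illustrated with a deliberately simple reward rule. Each round, a fixed token emission is split among AI models in proportion to the scores evaluators give them, and models at or below a quality threshold get nothing. The rule is a hypothetical example only. It is not how Bittensor or Autonolas actually calculate rewards, and the model names, scores and threshold are invented.

```python
# Hypothetical reward rule, not Bittensor's or Autonolas's actual mechanism.

def distribute_rewards(scores: dict[str, float], emission: float,
                       min_score: float = 0.0) -> dict[str, float]:
    """Split `emission` pro rata to score; models scoring at or below min_score get 0."""
    eligible = {model: s for model, s in scores.items() if s > min_score}
    total = sum(eligible.values())
    if total == 0:
        return {model: 0.0 for model in scores}
    return {model: emission * eligible.get(model, 0.0) / total for model in scores}

round_scores = {"model_a": 0.9, "model_b": 0.6, "model_c": 0.1}  # invented evaluator scores
print(distribute_rewards(round_scores, emission=100.0, min_score=0.2))
```

With these sample scores, model_c falls below the threshold. The emission is then split 60/40 between the other two models.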

Based chatbots on Gab

Gab, the free speech social media platform for trolls, has announced a range of “based AI chatbots” for GabPRO users.

Simply mention the bot in a post, and the selected based AI will respond with a quote post in your notifications. There are chatbots based on the Unabomber (Uncle Ted), Netanyahu (Bibi Bot) and Donald Trump (Trump AI). There is also a chatbot called Tay, which Gab claims is “an experiment in the limits of free speech. You will be offended.”

New discoveries

The dystopian vision of AI is that the technology will take our jobs and enslave the world. The utopian vision is that it will lead to a series of medical breakthroughs and alleviate suffering.

There are some promising developments on the latter front, with Massachusetts Institute of Technology researchers using AI to discover the first new class of antibiotics in 60 years, one that’s effective against drug-resistant MRSA bacteria.

About 35,000 people die each year in the EU from drug-resistant bacterial infections, and a similar number die in the U.S. This new avenue of research could literally be a lifesaver.

The team used a deep learning model, in which an artificial neural network learns patterns from data and makes predictions without being explicitly programmed. They trained it on data covering the chemical structures of 39,000 compounds and their antibiotic activity against MRSA. Three additional models then estimated how toxic each compound is to humans. This let the team zero in on candidates that can combat microbes while doing minimal harm to patients.
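The trained models themselves are beyond a short example. The screening logic described, with one model scoring antibiotic activity and three more scoring toxicity, can be sketched as a filter over their outputs. Several details are assumptions for illustration and are not the MIT team’s actual criteria: the thresholds, the rule that a compound must pass every toxicity model, and the compound names and scores.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    compound: str
    activity: float        # hypothetical predicted probability of activity against MRSA
    toxicity: list[float]  # hypothetical predicted toxicity from each of three toxicity models

def shortlist(preds: list[Prediction], min_activity: float = 0.8,
              max_toxicity: float = 0.2) -> list[str]:
    """Keep compounds predicted active AND non-toxic under every toxicity model,
    ranked by predicted activity (highest first)."""
    keep = [p for p in preds
            if p.activity >= min_activity and max(p.toxicity) <= max_toxicity]
    return [p.compound for p in sorted(keep, key=lambda p: p.activity, reverse=True)]

# Made-up model outputs for illustration.
candidates = [
    Prediction("cmpd-001", 0.95, [0.05, 0.10, 0.08]),
    Prediction("cmpd-002", 0.91, [0.40, 0.05, 0.02]),  # flagged by one toxicity model
    Prediction("cmpd-003", 0.55, [0.01, 0.02, 0.03]),  # safe but weakly active
    Prediction("cmpd-004", 0.85, [0.12, 0.15, 0.09]),
]
print(shortlist(candidates))
```

With the made-up numbers, only cmpd-001 and cmpd-004 survive. cmpd-002 is flagged by one toxicity model, and cmpd-003 is not active enough.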

Interestingly, the researchers were able to study how the models learned and made predictions. Normally, these models are so complex that nobody really knows what’s going on under the hood.

“What we set out to do in this study was to open the black box,” explained MIT postdoc Felix Wong, one of the study’s lead authors.

In another example of AI-led discovery, Google DeepMind used an LLM to make progress on a famous open maths problem called the cap set problem, finding larger cap sets than were previously known. The researchers claimed in Nature that it was the first time an LLM had been used to make a new discovery on a longstanding scientific puzzle.

They used a tool called FunSearch, which takes a weakness of LLMs (their tendency to invent fake answers, or “hallucinate”) and turns it into a strength. Basically, they had one model make up a million or so candidate answers, most of them wrong, and then used an automated evaluator to check whether any of them were any good. While only a tiny handful were good, “You take those truly inspired ones, and you say, ‘Okay, take these ones and repeat,’” explains Alhussein Fawzi, a research scientist at Google DeepMind.
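A cap set is a set of points in (Z/3Z)^n with no three points on a line. Equivalently, no three distinct points sum to zero mod 3. FunSearch itself has an LLM write and rewrite Python programs, and an automated evaluator scores them. The loop below is a much simpler stand-in built on a few assumptions. Random swaps in a priority ordering play the role of the LLM’s made-up answers, and a greedy builder turns each ordering into a candidate. An evaluator then checks each candidate and keeps only improvements, which the next round builds on. The dimension N = 4 and the search parameters are arbitrary choices, and this is not DeepMind’s code.

```python
import itertools
import random

N = 4  # dimension: points are vectors in (Z/3Z)^N

def third_point(a, b):
    """Return the unique c with a + b + c = 0 (mod 3): the third point on the line through a and b."""
    return tuple((-x - y) % 3 for x, y in zip(a, b))

def is_cap_set(vectors):
    """True if no three distinct vectors lie on a line (equivalently, sum to 0 mod 3)."""
    members = set(vectors)
    return all(third_point(a, b) not in members
               for a, b in itertools.combinations(vectors, 2))

def evaluate(vectors):
    """Evaluator: score a candidate by its size, or 0 if it is not a valid cap set."""
    return len(vectors) if is_cap_set(vectors) else 0

def greedy_cap_set(order):
    """Turn a priority ordering into a candidate: add each vector unless it would complete a line."""
    chosen, members = [], set()
    for v in order:
        if all(third_point(a, v) not in members for a in chosen):
            chosen.append(v)
            members.add(v)
    return chosen

def mutate(order, rng, swaps=3):
    """Stand-in for the generator: propose a variation on the best answer so far."""
    new = list(order)
    for _ in range(swaps):
        i, j = rng.randrange(len(new)), rng.randrange(len(new))
        new[i], new[j] = new[j], new[i]
    return new

def search(rounds=50, samples_per_round=10, seed=0):
    rng = random.Random(seed)
    points = list(itertools.product(range(3), repeat=N))
    best_order = rng.sample(points, len(points))
    best = greedy_cap_set(best_order)
    best_score = evaluate(best)
    for _ in range(rounds):
        for _ in range(samples_per_round):
            order = mutate(best_order, rng)
            candidate = greedy_cap_set(order)
            score = evaluate(candidate)
            if score > best_score:  # keep only improvements, then build on them
                best_order, best, best_score = order, candidate, score
    return best

best = search()
print(len(best), is_cap_set(best))
```

The largest possible cap set in four dimensions is known to have 20 points, which gives a yardstick for how close this naive search gets. FunSearch’s results concern much larger dimensions, where no such answer is known.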

All Killer, No Filler AI news

— OpenAI boss Sam Altman has been flexing a watch worth half a million dollars and driving a McLaren worth $15 million. What does this mean for AI alignment? Nothing, says Altman: “i somehow think it’s mildly positive for AI safety that i value beautiful things people have made,” says the man now too rich for capital letters.

— Captain DeFi has created a custom GPT based on the last four years of Messari Crypto Theses. It says that “AI and Crypto Integration” is the top finding of the latest report.

— The Australasian Institute of Judicial Administration believes that AI may “entirely” replace judicial discretion in sentencing, start predicting the outcome of cases and judge whether or not someone will re-offend.

— The 2024 presidential election will be the first “AI election,” according to The Hill, which reports that six out of 10 people believe misinformation spread by AI will have an impact on who ultimately wins the race.

— About half of the 30,000 employees selling ads for Google are reportedly no longer needed, thanks to the company’s adoption of AI.

— Selected users of Bing Chat now have free access to GPT-4 Turbo and various plugins, and Microsoft is also adding a code interpreter to Copilot.

— Animoca Brands founder Yat Siu says that non-player characters in video games will increasingly be powered by AI, and experiences will be designed around interacting with them.


The post Top AI tools of 2024, weird DEI image guardrails, ‘based’ AI bots: AI Eye appeared first on Cointelegraph Magazine.